GPT 5.2 Nailed My Plant Care Question, Then Got Fooled by a Moose

Tool: ChatGPT (GPT 5.2)
Where to find it: chat.openai.com
What it costs: Free tier available; Plus subscription $20/month

The Experiment

I had a trailing vine plant sitting on top of a cabinet in my house and wanted to know if I should do anything with it. Simple question. But I also had an ulterior motive: I've been using the Picture This app (https://www.picturethisai.com/) for several years to help keep my houseplants healthy. I wanted to see if ChatGPT could match a specialized plant identification app.

I snapped a photo and uploaded it to GPT 5.2 with the prompt: "Should I do something with this?"

I kept the prompt intentionally vague. I wanted to see if GPT would pick up on the long trailing vines and suggest trimming or propagating, maybe even recommending I gift cuttings to neighbors. I didn't specify that I was asking about the plant, so the model was arguably just being helpful by addressing everything it could see in the image.

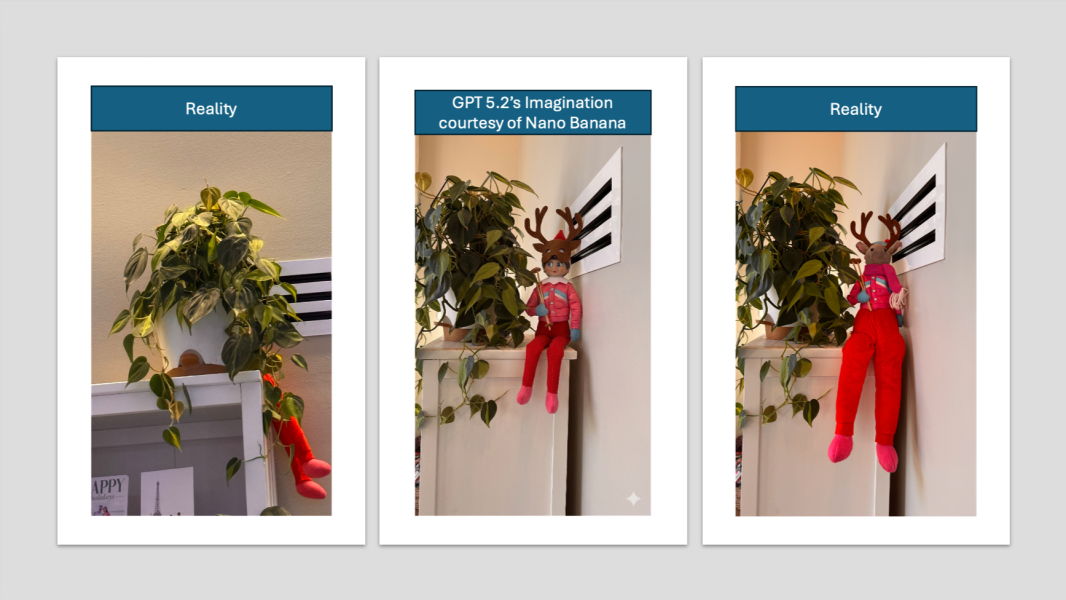

The photo showed the plant in a white pot, cascading down the side of the cabinet. It also happened to capture the leg of a decorative moose in ski clothes that was hanging nearby as part of our holiday decor. Yes, this is another post that's sort of about a moose (see: Nano Banana Pro Context, Cartoons, and Guardrails).

What GPT 5.2 Got Right

The plant identification was spot on. GPT 5.2 correctly identified it as a heartleaf philodendron (likely the 'Brasil' variety, based on the variegation pattern) and delivered genuinely useful care advice. It suggested trimming and propagating the long vines, rotating the pot to encourage even growth, and checking that the pot was stable since the vines were pulling it downward. These were all solid recommendations, and they matched what my Picture This app told me.

The model even noted that philodendron cuttings root easily in water, which is true and practical. If you want more plants, just snip and drop in a jar.

What GPT 5.2 Got Confidently Wrong

Then came the fifth point in its response: "Elf-on-the-Shelf situation (optional, but cute)."

The model saw the red legs of our decorative moose in ski clothes and confidently identified it as an Elf on the Shelf. It even added a little joke: "If you don't trim the vines, your elf might try to rappel down the cabinet."

The moose was partially obscured by the plant, so all that was visible were red legs hanging down. During the holiday season, red legs plus partial visibility apparently equals Elf on the Shelf in the model's interpretation. Rather than expressing uncertainty about what the object was, GPT 5.2 committed to the identification and ran with it.

Learnings and Observations

Vague prompts invite comprehensive (and sometimes wrong) answers. I asked "should I do something with this?" without specifying what "this" was. The model did exactly what you'd want a helpful assistant to do: it addressed everything it could identify. The lesson here isn't that GPT 5.2 failed; it's that vague prompts give AI room to make assumptions.

AI vision models make contextual assumptions. The holiday season timing, the red color, and the partial visibility all pointed toward a reasonable guess. The problem isn't that the model made an inference. The problem is that it presented that inference with the same confidence as factual plant identification.

Partial information led to pattern matching, not uncertainty. When humans see something partially obscured, we often acknowledge that we're not sure what it is. AI models often pick the most statistically likely match and present it as fact. There was no "this might be" or "I can't quite tell" anywhere in the response.

Comprehensive responses can include confident errors. The model was clearly trying to be thorough and helpful by addressing everything in the image. That helpfulness instinct led it to make a call on something it probably should have left alone or flagged as uncertain.

The plant advice was legitimately good. The philodendron identification and care tips were accurate and actionable, yet the object recognition failure showed up in the very same reply. This experiment shows that AI image analysis can be highly useful and completely off-base in a single response.

General AI models are catching up to specialized apps. The plant identification and care advice matched what I would have gotten from Picture This, an app I've relied on for years. For basic plant care questions, a general model like ChatGPT might be good enough, especially if you're already paying for a subscription. That said, dedicated plant apps still offer features like disease diagnosis, watering schedules, and plant journals that general AI doesn't replicate.

Always verify the parts that matter to you. If I had been asking specifically about the holiday decoration, I would have gotten bad information. Since I was asking about the plant, the response was helpful despite the moose-to-elf mixup. Knowing which parts of an AI response to trust requires understanding what the model is likely to get right versus where it might be guessing.

What happens when AI uses what it sees to sell to us? This experiment got me thinking about the future. If AI models are already scanning images and making assumptions about what's in our homes, how long before that capability gets used for marketing? Imagine uploading a photo for plant advice and getting suggestions to buy a new pot, fertilizer, or a plant stand. Or the model noticing your holiday decor and recommending gifts. The line between helpful observation and targeted advertising could get very blurry, very fast.

Today's Questions

Have you ever gotten a response from an AI that was partially brilliant and partially wrong in the same answer? How do you decide which parts to trust? And what happens to trust when brands and models start to subtly sell to you in conversations?