Quick Take: Knowing the Risks Matters — A Reminder from the AI Safety Index

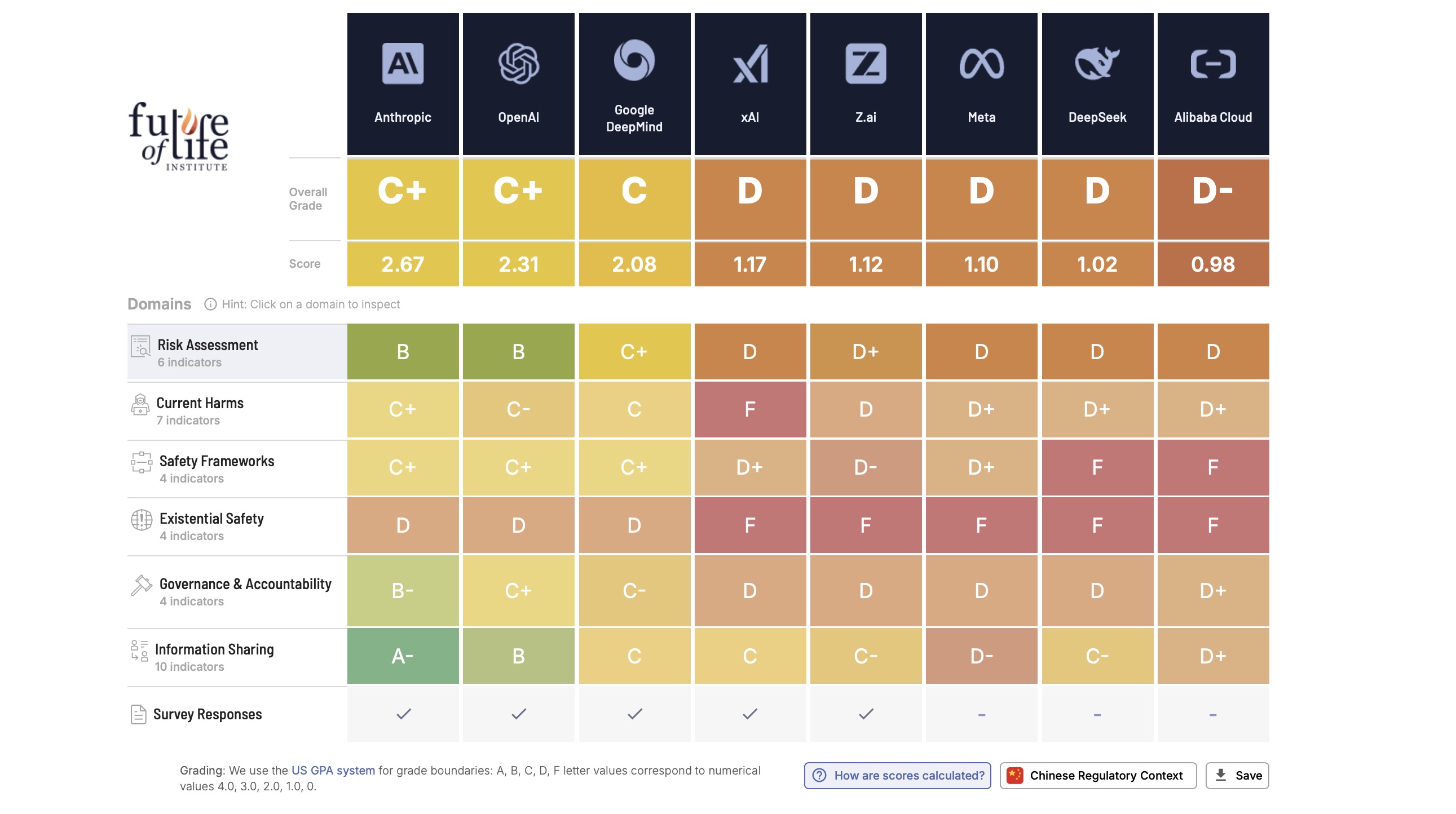

This week, I dug into the latest AI safety pulse check: the Future of Life Institute's "AI Safety Index: Winter 2025." It reviews eight leading AI companies across six critical domains, from risk assessment and governance to transparency and long-term "existential safety."

What jumped out? None of the evaluated firms earned a clean bill of health. Even the top performers only reached around a C or C+ on the safety scoreboard. Meanwhile, many companies still lack credible plans for how to safely manage (or even control) future ultra-powerful AI systems.

That matters for all of us, even if you're like me and are eager to experiment with new technology and test the limits. Because here's the reality: these tools are not risk-free. The faster AI evolves, the bigger the stakes become.

What This Means For Anyone Using GenAI (Including Me)

No tool is "innocent" and even "free" has a cost.

Remember: If it's free, you're the product. Even those popular, publicly available models and chatbots we all use? They're built by companies with significant safety gaps.

Understanding risk equals smarter use. Just because AI can help doesn't mean it should be used blindly. Knowing what can go wrong helps me decide when to lean on AI versus when to rely on human judgment.

Safety isn't automatic. It requires vigilance. As capabilities grow, so do potential harms. We're not guaranteed safety simply because the AI "works."

Your use matters. Even small, everyday interactions (deciding on wine, writing a note, planning a trip) sit on a spectrum that leads all the way up to existential questions if we get complacent.

My Lesson: Experiment, But Know What You're Trying and What You're Betting

I started Daily(ish) GenAI Experiments with pure enthusiasm: cool inputs led to helpful outputs, and I learned something. The FLI Safety Index reminded me that behind every helpful AI prompt, there's a whole infrastructure of trade-offs, unseen development choices, and uncertain long-term risks.

I'm not saying don't experiment. I am saying: let's experiment with awareness. For me, that means continuing to ask "why" and "what if," staying thoughtful about when I defer to AI, and maybe, every now and then, choosing human intuition instead.

Because the value of GenAI doesn't come just from convenience. Its real test is whether it helps us make better choices without losing sight of what's at stake.

Read the full AI Safety Index at https://futureoflife.org/ai-safety-index-winter-2025/?utm_source=chatgpt.com

Tools Used

N/A